- FutureIntelX


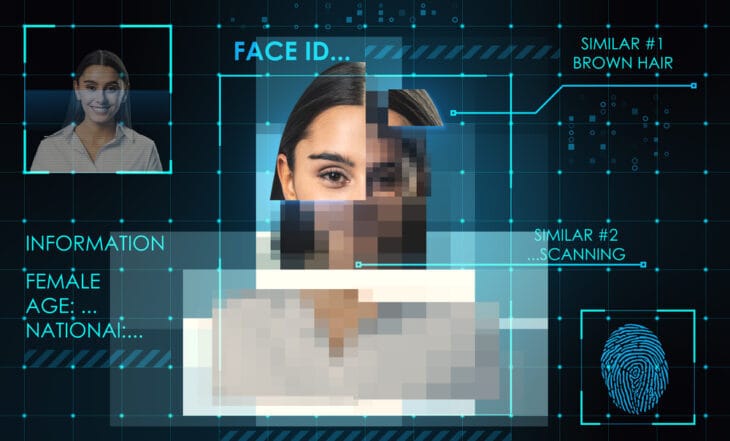


Deepfake Fraud Surges: GenAI Enablers Fuel Sophisticated Scams Targeting Small Businesses.

Plus ↳ AI Fair-Use Precedent Set: Courts Favor Meta and Anthropic; Copyright Risk Landscape Redefined.

Deepfake Fraud Surges: GenAI Enablers Fuel Sophisticated Scams Targeting Small Businesses.

Image source: GDPR.pl

TL;DR | Executive Intelligence Brief

U.S. courts have ruled that Anthropic’s and Meta’s training of LLMs on copyrighted books qualifies as “transformative” fair use, but using pirated copies of books can still be infringing.

Why It Matters: These rulings give AI firms legal cover but also mark its limits, signaling lingering liability risks and evolving regulation.

Who’s Impacted: AI companies, authors, publishers, tech investors, regulatory bodies, legal counsel.

Action Prompt: Map your data sources and their license trails, test for any piracy exposure, and prepare now for granular audits.

What’s New & Why It Matters

Generative AI makes scams easy to create: deepfaked employees trick finance clerks into transferring large sums, and cloned storefronts deceive customers at scale.

Fraud is no longer a matter of chance; it is now programmable, efficient, and low-cost. Small and medium enterprises (SMEs) are emerging as prime targets because of their limited defenses and high-trust customer bases.

This threat cuts across industries, from banking to healthcare to e-commerce, and demands renewed focus on AI-resilient verification protocols across enterprise ecosystems.

Changing the Landscape

Structural Shifts:

Attack vectors now automate brand impersonation and audio/video trust breaches.

Defense systems lag, and detection platforms can unintentionally feed adversarial data loops in which attackers refine their fakes until detectors miss them.

Sector Examples:

Finance: Deepfake calls prompting multi-million-dollar wire transfers.

E-commerce: Cloned storefront scams eroding consumer trust.

Emerging Models:

Composable, ethical-AI defense stacks that require real-time verification and multimodal fraud detection.

Continuous brand watermarking and decentralized identity layers to establish trust in digital interactions.

Signal Drive

Directional Shift:

Trust will evolve from static identity toward dynamic authenticity, moving from “who you are” to “how you behave in context.”

Next Enablers:

Adoption of biometric watermarks and encrypted challenge-response tools.

Deployment of AI-for-AI defenses that learn attack patterns and respond adaptively.
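To make the challenge-response idea concrete, here is a minimal sketch, assuming a secret shared out of band between a business and its staff (a hypothetical setup, not any specific product). A keyed hash (HMAC) stands in for the “encrypted challenge-response tools” above: before a transfer is approved, the caller must answer a fresh random challenge, which a cloned voice or face cannot do without the secret.

```python
import hashlib
import hmac
import secrets


def issue_challenge() -> str:
    """Generate a fresh, unpredictable challenge for every verification attempt."""
    return secrets.token_hex(16)


def respond(shared_secret: bytes, challenge: str) -> str:
    """Compute the answer on the caller's enrolled device (hypothetical setup)."""
    return hmac.new(shared_secret, challenge.encode(), hashlib.sha256).hexdigest()


def verify(shared_secret: bytes, challenge: str, response: str) -> bool:
    """Recompute the expected answer and compare it in constant time."""
    expected = respond(shared_secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    secret = secrets.token_bytes(32)  # provisioned out of band, never spoken on a call
    challenge = issue_challenge()
    print(verify(secret, challenge, respond(secret, challenge)))     # True
    print(verify(secret, challenge, respond(b"guess", challenge)))   # False
```

Because each challenge is random and used once, a recorded answer from an earlier call does not verify against a new challenge, which is the property that matters against replayed or synthesized audio.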

Spin-Off Effects:

Insurance markets: Premiums will rise for SME identity risk.

Regulators: Will demand real-time fraud audits for AI threat management.

What Comes Next

Advancement Trajectory:

Expect investment in fraud-detection startups and regulatory pressure for mandatory verification standards within 6–12 months.

Stakeholder Alignment:

SMEs, security vendors, and regulators will need to collaborate on real-time alert systems.

Trade bodies will introduce “trusted brand” certification mechanisms.

Ecosystem Emergence:

Adjacent sectors (cyber insurance, authentication platforms, and trust-tech startups) will see rapid growth as a forced response.

Signalwatch Triggers

Company/Lab: Launch of real-time biometric voice/face watermark tech.

Policy/Regulation: New cross-border fraud standards from OECD or EU.

Dataset/Study: Public release of globally mapped GenAI scam incidence data.

If this deepfake fraud trend becomes mainstream, how will we need to redesign our authentication systems or phase out legacy trust models?

AI Fair-Use Precedent Set: Courts Favor Meta and Anthropic; Copyright Risk Landscape Redefined.

Image source: Financial Times

TL;DR | Executive Intelligence Brief

U.S. courts ruled in favor of Anthropic and Meta, affirming fair use in AI training.

Anthropic avoided copyright liability for the training itself, while Meta prevailed after the authors failed to prove market harm, including dilution.

A new lawsuit alleges that Microsoft trained its Megatron model on pirated books, testing the limits of fair use.

This legal wave reshapes permissible data sources for AI developers worldwide.

What’s New & Why It Matters

U.S. federal judges determined that training AI models through large-scale ingestion of copyrighted texts qualifies as fair use, ruling for Anthropic and Meta in separate suits. They found insufficient evidence of market harm or dilution from AI outputs.

Strategic Context:

These landmark rulings support AI development but leave legal grey zones: Anthropic still faces potential penalties for copying pirated books, and Microsoft now faces a lawsuit of its own.

Global Relevance:

The U.S. standard tempts companies to rely on unlicensed data, but stricter regimes in the UK, EU, and India will face mounting pressure to align or diverge.

Changing the Landscape

Structural Shifts:

AI training feeds: Training on massive copyrighted corpora now has fair-use benchmarks to point to.

License models: Commercial AI may sidestep licensing, shifting costs downstream.

Defense strategies: Fair-use reliance becomes the primary legal play.

Sector Examples:

Publishing: Creative IP holders may lose leverage over dataset control.

Enterprise SaaS: Data platform providers could see rapid expansion under looser licensing norms.

Emerging Models:

Attribution-first services where outputs are labeled with data provenance.

Compliance frameworks that map dataset composition to levels of legal risk.

Signal Drive

Directional Shift:

From pre-emptive licensing toward post-hoc fair-use justification, as AI players prioritize speed over permission.

Next Enablers:

Development of audit trails and provenance tooling.

Adoption of dataset catalogs showing what was ingested and why.
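A dataset catalog of this kind can be sketched as a list of entries recording where each source came from and why it was ingested, plus an audit that buckets sources by risk. The sketch below is hypothetical: the field names, acquisition categories, and risk tiers are illustrative assumptions (loosely echoing the rulings’ distinction between lawfully acquired and pirated copies), not legal advice or any vendor’s schema.

```python
from dataclasses import dataclass
from enum import Enum


class Acquisition(Enum):
    LICENSED = "licensed"
    PURCHASED = "purchased"          # lawfully bought copy, no training license
    PUBLIC_DOMAIN = "public_domain"
    PIRATED = "pirated"              # e.g. a shadow-library download
    UNKNOWN = "unknown"              # provenance never recorded


@dataclass(frozen=True)
class DatasetEntry:
    source_id: str
    acquisition: Acquisition
    purpose: str                     # why the source was ingested


# Hypothetical risk tiers for illustration only; not derived from any legal test.
RISK = {
    Acquisition.LICENSED: "low",
    Acquisition.PUBLIC_DOMAIN: "low",
    Acquisition.PURCHASED: "medium",
    Acquisition.UNKNOWN: "high",
    Acquisition.PIRATED: "high",
}


def audit(catalog: list[DatasetEntry]) -> dict[str, list[str]]:
    """Group catalog sources into risk tiers for review."""
    report: dict[str, list[str]] = {"low": [], "medium": [], "high": []}
    for entry in catalog:
        report[RISK[entry.acquisition]].append(entry.source_id)
    return report


if __name__ == "__main__":
    catalog = [
        DatasetEntry("books-licensed-2024", Acquisition.LICENSED, "pretraining"),
        DatasetEntry("scanned-purchased-books", Acquisition.PURCHASED, "pretraining"),
        DatasetEntry("shadow-library-dump", Acquisition.PIRATED, "pretraining"),
    ]
    print(audit(catalog))
    # {'low': ['books-licensed-2024'], 'medium': ['scanned-purchased-books'], 'high': ['shadow-library-dump']}
```

Treating unknown provenance as high risk is a deliberate choice in this sketch: a source nobody can account for is the hardest to defend in an audit.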

Spin-Off Effects:

Insurance markets adjusting for data-origin risk.

Regional trade tension: Companies may adopt U.S. baselines and avoid stricter regimes.

What Comes Next

Advancement Trajectory:

Watch how Microsoft’s case is resolved; if penalties are minimal, fair use could become the de facto industry standard in the U.S.

Stakeholder Alignment:

Content unions, publishers, and legislative bodies in the EU, UK, and India will weigh in, and unilateral regulation is expected.

Ecosystem Emergence:

Compliance-as-a-Service firms monitoring dataset legal hygiene.

Provenance APIs linking training data to licensing metadata.

Signalwatch Triggers

Company: Launch of “fair-use certified” AI datasets or training pipelines.

Policy: EU or UK mandating disclosure of training datasets.

Dataset/Study: Academic benchmarking of fair-use vs. licensed data performance.

If U.S. courts solidify the fair-use precedent, how will we need to rearchitect training pipelines or decommission legacy licensing models?

How can we make FutureIntelX more valuable for you?
